GIRL AI

Exploring feminized AI through playful design interventions. What happens when we reclaim the aesthetics of "girl culture" to critique tech?

Formats currently available, planned, or in testing phases.

Each workshop is an invitation into this space of playful subversion, participatory inquiry, and collective unlearning. Less screen time, more time walking around, making together, noticing things, and relating to each other. This is not about learning software; it's about making sense of technology otherwise.

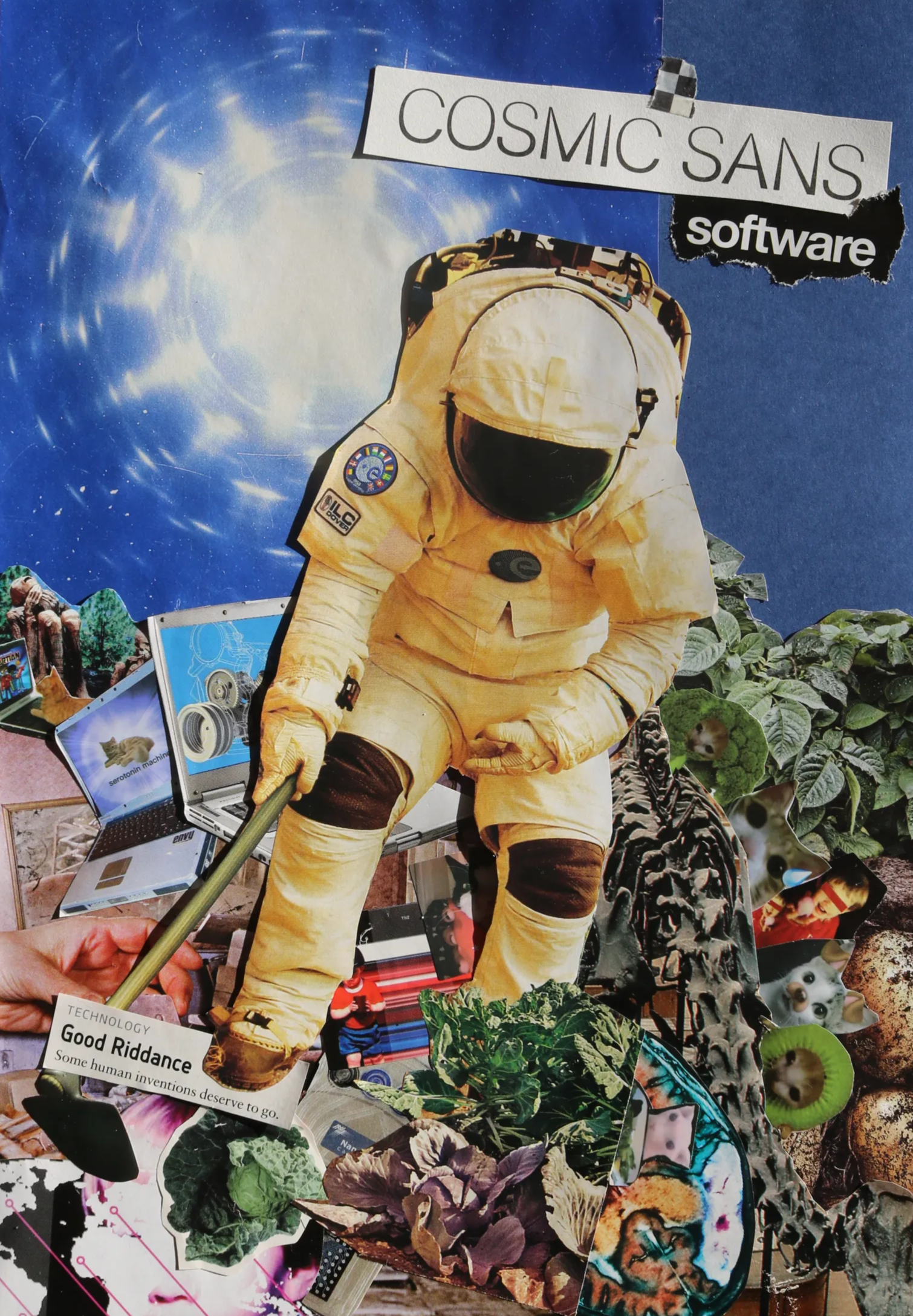

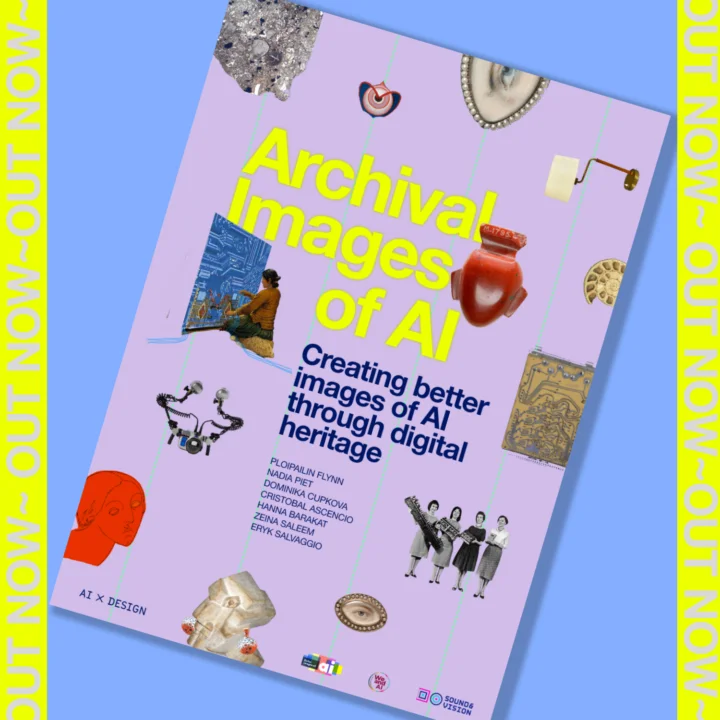

Collage workshop creating alternative visual representations of AI. Moving beyond the blue brains and robot hands to something weirder, more human.

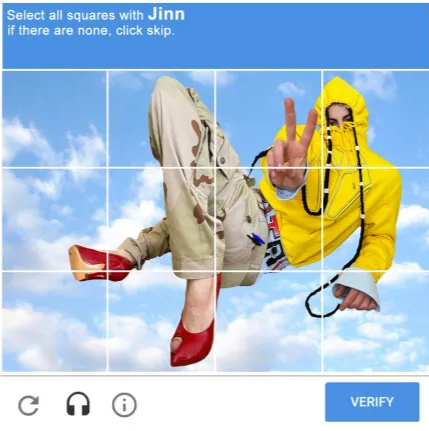

CAPTCHA image-making as a practice of creative refusal. What would training data look like if it was designed to confuse, mislead, or simply say no?

Collective storytelling session exploring the weird overlaps between conspiracy thinking and algorithmic recommendation systems. Bring your wildest theory.

Screenshots as personal archaeological sites. Excavate your screenshot drawer and turn private digital residue into shared material.

Low-tech image manipulation workshop. If deepfakes are about perfect deception, shallowfakes embrace the glitch, the obvious cut, the visible seam.

Coming soon.

Feel free to reach out if you would like to host any of these formats at your venue. Open to festivals, universities, local communities, and institutions. Each session can be adapted to different contexts, durations, and group sizes. You can also pitch your own workshop idea: let's see what weird things we can make together.

Each session offers a different entry point: some begin with images, others with language, some take you outside the room or your own bubble. Together, they form a counter-syllabus for relating to technology in more imaginative and nuanced ways.

The workshops span textual, visual, and embodied modalities. We're making sense of technology otherwise.

Sign up for the newsletter to get notified about upcoming public workshops in Lisbon and elsewhere.

Excavating screenshot folders as personal archaeological sites: turning private digital residue into shared material through guided selection and digital collage.

Screenshots are personal artifacts not meant to be seen. They're taken quickly, often carelessly, without an audience in mind: saved recipes at 2am that you'll never try, NDA slides, group chat gossip, private obsessions, or just things we don't want to forget but don't yet know how to use.

Screenshots are our anti-algorithmic memory: highly biased, rather private, accidental archives of how we actually move through the internet when no one is watching.

This workshop treats screenshot folders as personal archaeological sites: messy drawers, highly subjective archives of attention formed outside algorithmic curation. Through guided selection and digital collage with a custom-made tool, participants will excavate their own screenshot drawers and explore the role screenshots play in our lives, as well as their power to become sources of knowledge rather than clutter.

Together, we'll ask what these trinkets of the internet say about taste, memory, authorship, and refusal, and what happens when private digital residue becomes shared material.

Artwork by Ema Diehelová

Custom tool for this workshop

In this workshop, we are unlearning the authority of the feed, the illusion of neutral relevance, and the assumption that algorithms should decide what is worth seeing, saving, or remembering.

Tracey Emin, My Bed, 1998

No past sessions yet — this is the debut!

Playfully subverting big tech's algorithmic imaginaries through participatory design — exploring gender bias, feminist futures, and creative emancipation.

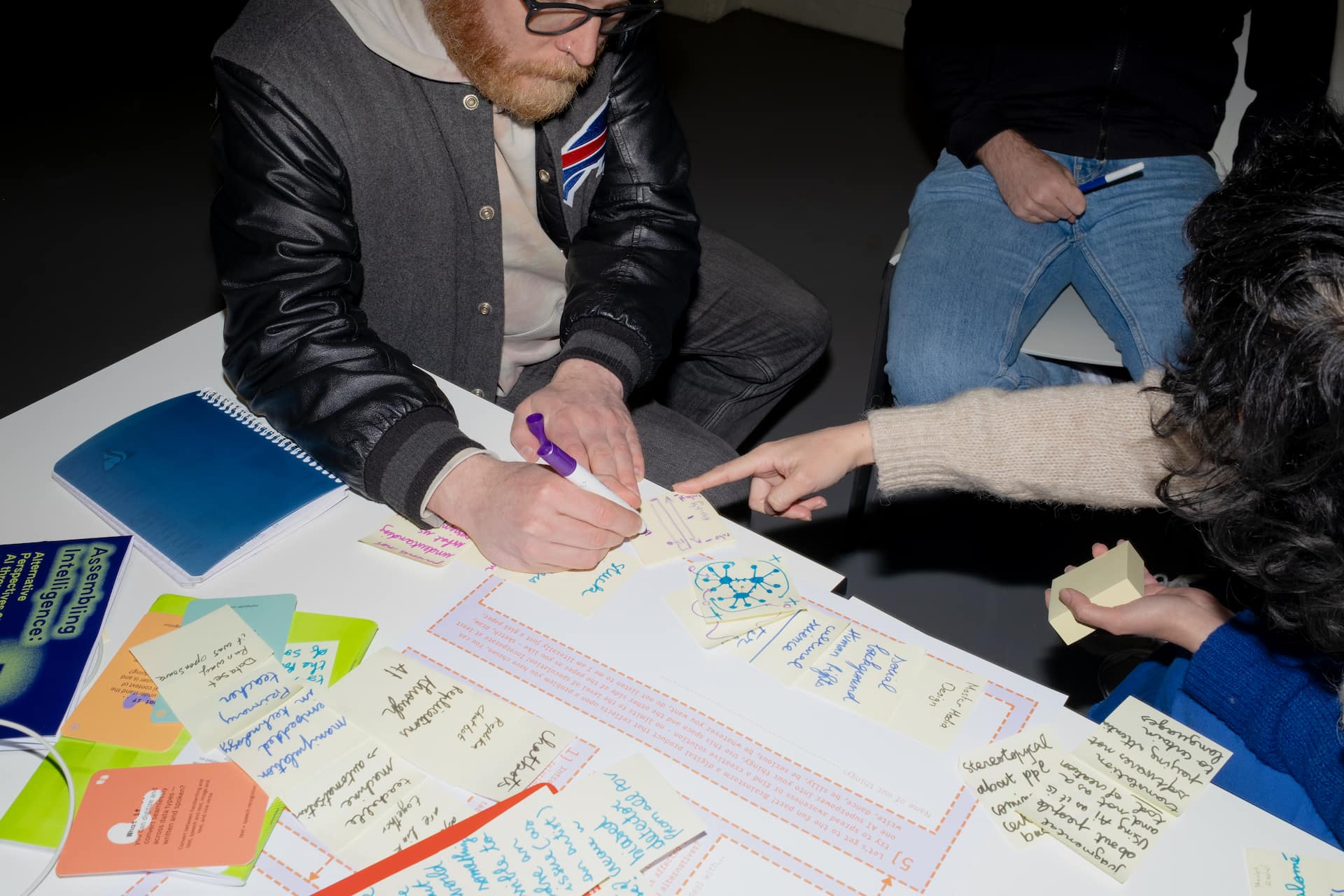

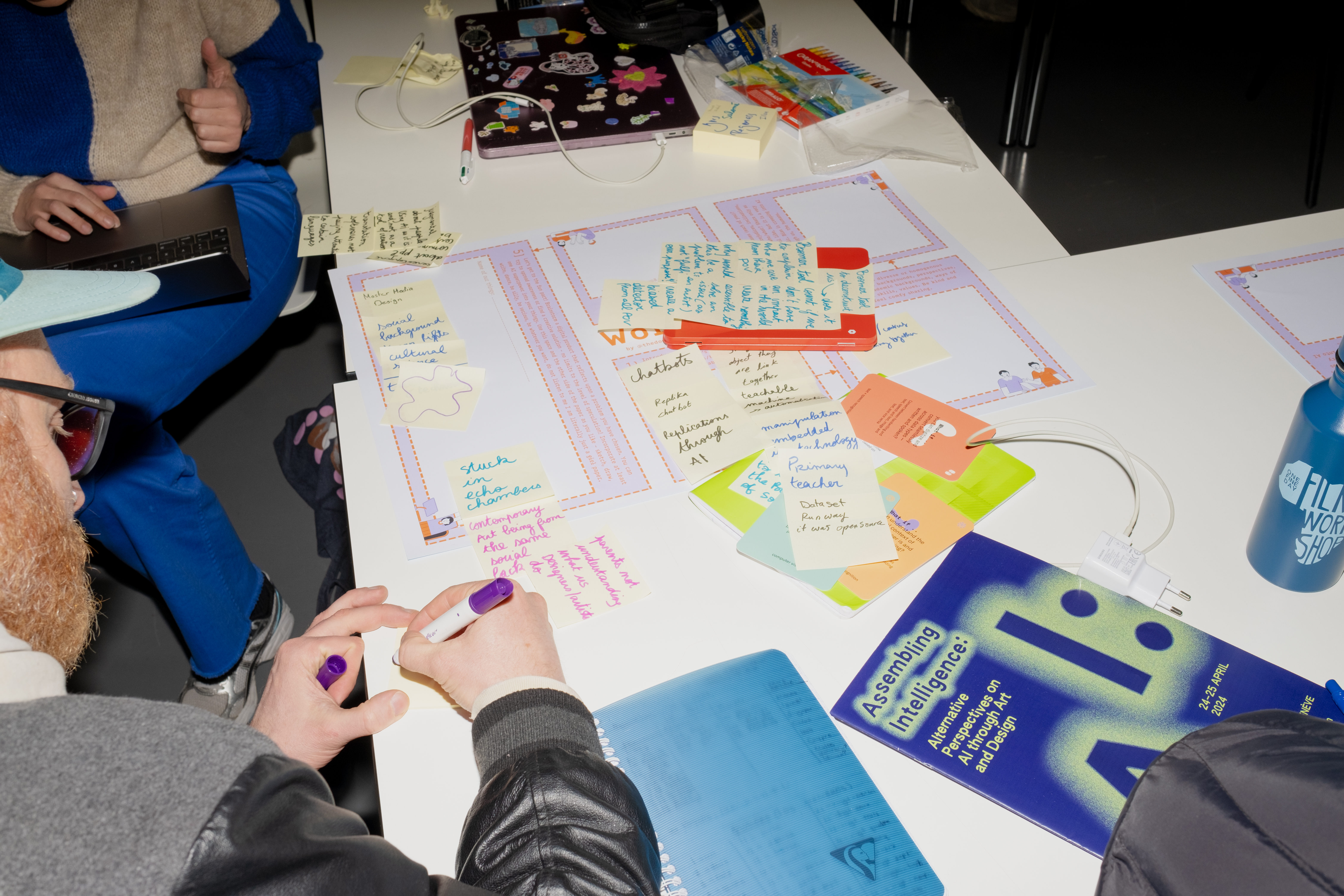

Workshop at Assembling Intelligence, HEAD – Genève, Switzerland 2024 © Fabrizio Arena

Nowadays, our virtual and real lives are almost completely interconnected. The boundaries between virtual and real, online and offline, and between genders have become blurry. Meanwhile, artificial intelligence is furiously entering the chat.

Shaped by dominant ideologies, new technologies and services do not really serve those in need; instead, they extend the range of exploitative practices. How can we fight against these practices and initiate social change?

In this workshop, we aim to playfully subvert big tech's algorithmic imaginaries to ones that align with ideologies of agency and care. Through a participatory approach we explore problems that women and marginalised groups face nowadays within digital environments.

Workshop at Adaptér Budapest, Hungary 2025 © Novák Doro

Workshop at Assembling Intelligence, HEAD – Genève, Switzerland 2024 © Fabrizio Arena

In this workshop, we are unlearning the assumptions that technology is neutral, that algorithmic systems serve everyone equally, and that gender bias in AI is a technical problem with a technical fix. We unlearn the passivity of being “users” and practice becoming active shapers of the tools and systems around us.

Workshop at National Gallery Prague, Czech Republic 2024 © Kristína Grežďová

No upcoming sessions scheduled — get in touch to host one.

Bye to glowing blue brains and glossy white robots, hi to softer, stranger, more situated images of AI. A hands-on collage session to collectively unlearn what AI is supposed to look like.

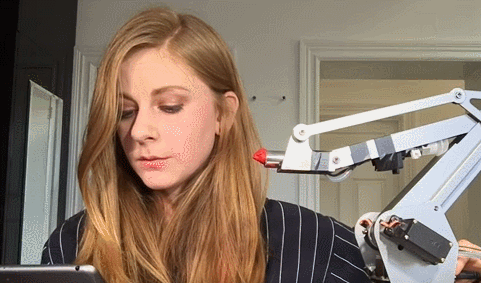

AIxDESIGN Festival: On Slow AI, Amsterdam, Netherlands (2025). Photo by Asia Giuliani.

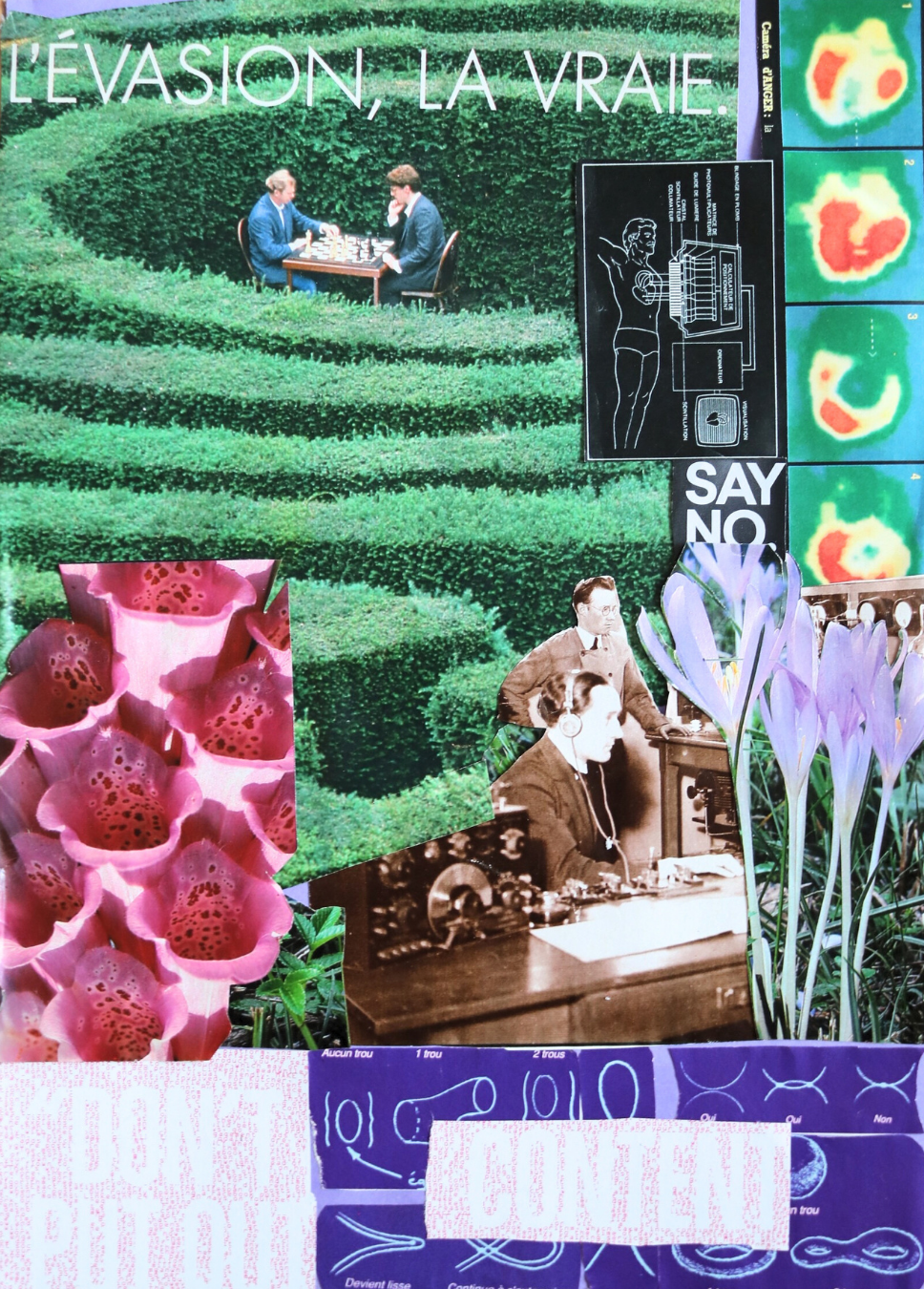

Stemming from the ongoing Archival Images of AI project developed within AIxDESIGN, this workshop is an invitation to collectively unlearn what AI is supposed to look like, and to imagine otherwise. In this hands-on (and fully offline) session, we'll reduce our collective screen time and pick up scissors instead.

Using the Archival Images of AI Playbook, we'll create new, messier, more situated representations of AI shaped by lived experience, not just machine fantasies.

Workshop results by Dominika Čupková.

Workshop results by Frederica Melo.

In this workshop, we are unlearning the imaginary of AI as abstract, clean, and far away, and learning instead to see it as already here, entangled in our drawers, screens, habits, and hands. We unlearn the idea that AI is neutral or inevitable, and reclaim our agency to picture it otherwise, pixel by pixel, paper scrap by paper scrap. Also: the idea that “serious” work doesn't include glue sticks.

No upcoming sessions scheduled — get in touch to host one.

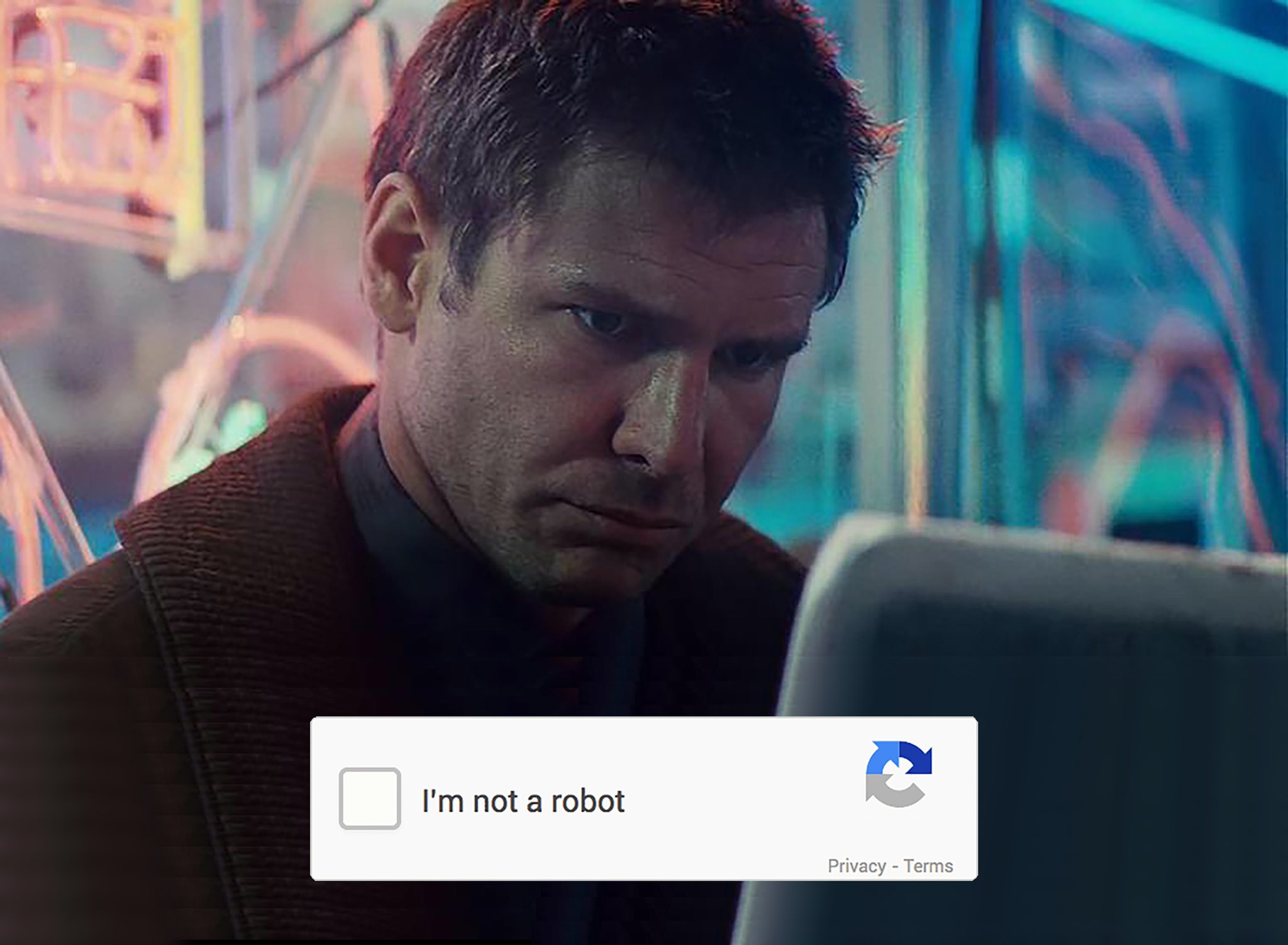

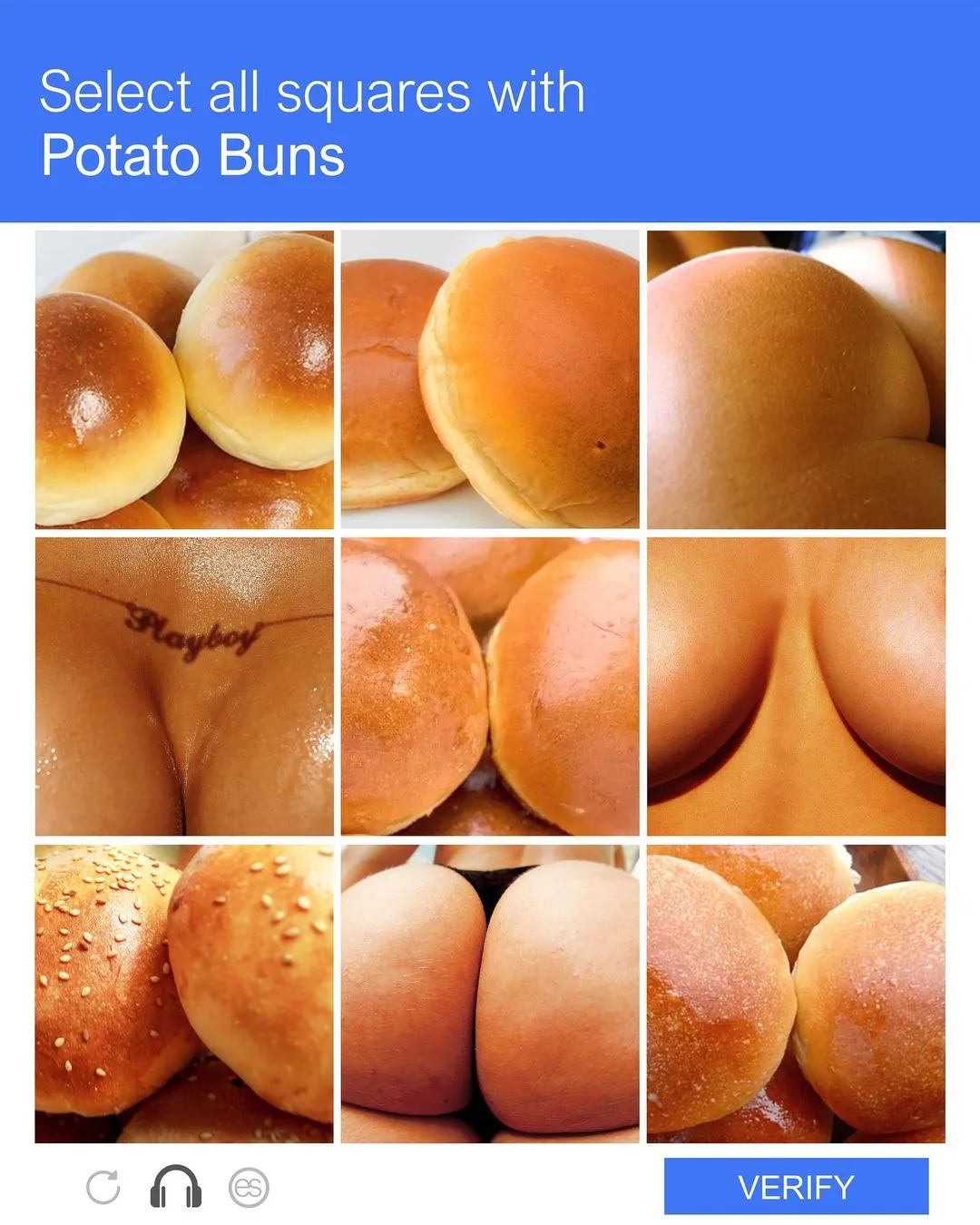

Walking, collecting, and making subversive CAPTCHAs that resist verification — turning algorithmic gatekeeping into a practice of creative refusal.

CAPTCHA is a type of security test used in computing to determine whether the user click-clack-clocking away is a human or a bot. CAPTCHAs act as gatekeepers, deciding who gets access based on specific criteria, usually visual recognition skills. This mirrors societal rules, where institutions define who is allowed to participate based on compliance with often invisible norms.

Instead of clicking through boxes to prove we’re human, what if we could invent our own tests of belonging?

CAPTCHURED is a participatory workshop that is partly walking, partly making. Participants gather images from the surrounding environment to form personal datasets, then transform these into CAPTCHAs that resist recognition. They are then invited to contribute the resulting works to CAPTCHURED, a participatory artwork and growing archive of subversive puzzles that resist verification. Come walk, come make, come trouble the algorithmic gaze.

Custom tool for this workshop.

In this workshop, we are unlearning the supposed neutrality of CAPTCHA, which reduces complex identities and ethical nuances to yes/no answers; the inevitability of algorithmic borders; and the notion that machines should define what it means to be human.

No upcoming sessions scheduled — get in touch to host one.

Conspiracies as collective storytelling — remixing, retelling, and transforming our shared paranoia into playful myth-making instead of debunking.

BYOC: Bring your own conspiracy is a participatory workshop where conspiracies are treated not merely as misinformation but also as mirrors of collective fear, folklore, and belief. They travel like rumours, mutate like myths, and reveal the hidden anxieties of a society.

Instead of debunking conspiracies, what if we looked at them as cultural myths, signals of our anxieties, and raw material for imagining otherwise?

In this workshop, conspiracies are not for proving or disproving, but for remixing, passing around, and transforming. Participants are invited to bring one conspiracy (from their uncle’s kitchen table, a late-night YouTube feed, a medieval witch rumour, or their own hunch about the neighbour’s dog). Together we’ll collect them into a living dataset and then re-narrate them through multiple formats. Conspiracies will mutate as they travel through groups, revealing how stories bend with each retelling and how machines amplify or censor collective fears. The final artefacts become a messy archive of our shared paranoia and playful myth-making.

Bring your own conspiracy. Bonus points if you lowkey believe it.

In this workshop, we learn to see conspiracies not only as misinformation but as collective storytelling shaped by fear, folklore, and (machine) bias. By making and remaking them, participants learn to ask “what does this conspiracy tell us about us?” instead of “is this true or a lie?” We also unlearn the idea that conspiracies are just dangerous noise, and the assumption that machines only help us get to the “truth.” Instead, conspiracies are treated as cultural signals, myths-in-motion, and playful material for collective unlearning.

No past sessions yet — this is the debut!